Neuroscope

About the project

A collaboration between Victor Becerra, Julia Downes, Mark Hammond, Slawomir Jaroslaw Nasuto, Kevin Warwick, Ben Whalley and Dimitris Xydas - researchers and doctoral students based at the Reading School of Pharmacy and at Cybernetics - and Elio Caccavale and David Muth.

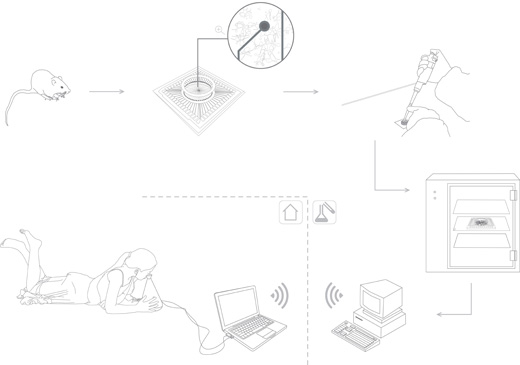

Neuroscope situated features of research from Reading in a domestic product, provoking questions about how objects in the home could be linked to material in the lab.

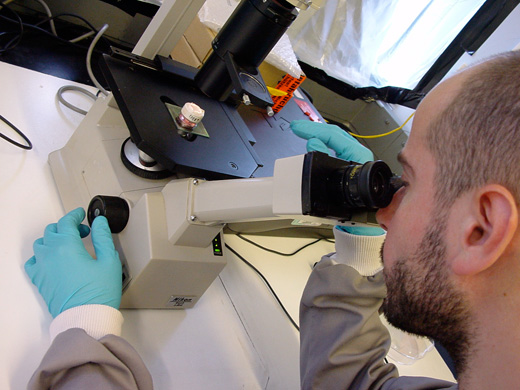

above: Using a microscope to see a culture of cells, and looking into Neuroscope

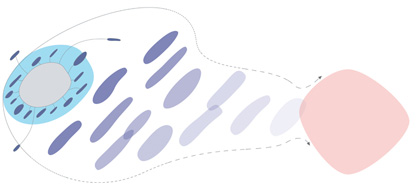

Neuroscope provides an interface through which a user interacts with a culture of brain cells cared for in a distant laboratory. As the virtual cells are 'touched', an electrical signal is sent to the actual neurons in the laboratory. The cells respond with changes in activity that may result in the formation of new connections. The user sees this response in real time, so that user and cell culture are joined in a closed loop through Neuroscope.
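The loop described above (touch, stimulus, response, display) can be illustrated with a small sketch. This is not the Neuroscope software: `ToyCulture`, `render` and `closed_loop` are hypothetical names, and the rule that a response halves with each step away from the touched cell is an assumption made purely for illustration, not a model of real neurons.

```python
class ToyCulture:
    """Hypothetical stand-in for the remote cell culture (not real neuron dynamics)."""

    def __init__(self, n_cells):
        self.activity = [0.0] * n_cells

    def stimulate(self, cell, strength=1.0):
        """Apply a stimulus at one cell and return the activity of every cell."""
        for i in range(len(self.activity)):
            distance = abs(i - cell)
            # Assumed toy rule: the response halves with each step away
            # from the stimulated cell.
            self.activity[i] += strength / (2 ** distance)
        return list(self.activity)


def render(activity):
    """Turn activity levels into a crude text display, one character per cell."""
    return "".join("#" if a >= 1.0 else "+" if a >= 0.5 else "." for a in activity)


def closed_loop(culture, touches):
    """For each touch: send a stimulus, read the response, draw a frame."""
    frames = []
    for cell in touches:
        response = culture.stimulate(cell)   # signal sent to the culture
        frames.append(render(response))      # response shown to the user
    return frames


# The user touches cell 2, then cell 0; each touch produces one frame.
frames = closed_loop(ToyCulture(n_cells=5), touches=[2, 0])
for frame in frames:
    print(frame)
```

Each frame depends on every earlier touch, because the toy culture keeps its activity between stimuli. That persistence is what makes the exchange a loop rather than a one-way display.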

Describing and discussing systems

above: Mark describes how cells can control a mobile robot - watch film (QuickTime 7 is required for films)

above: Discussing alternative uses for this system - watch film

“The idea is that the culture is in charge of its own behaviour so the entire system is one big self-referencing loop. This is the robot moving around under control of the culture so when you see it make another change in direction, that's because something has changed in the culture, so at one electrode cells are firing at a slightly higher frequency, which makes it go to the right.”
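As a rough illustration of the steering idea in this quote, the sketch below compares firing rates at two electrodes and turns towards whichever is more active. The function names, the spike times, the deadband and the choice of which electrode maps to 'right' are all assumptions for the example, not details of the Reading system.

```python
def firing_rate(spike_times, window_s):
    """Mean firing rate in Hz: number of spikes divided by the window length."""
    return len(spike_times) / window_s


def steer(rate_a_hz, rate_b_hz, deadband_hz=0.5):
    """Choose a direction from the difference between two electrode rates.

    Assumption: electrode A being more active means 'right'. Differences
    smaller than the deadband are ignored so small fluctuations do not
    make the robot twitch.
    """
    diff = rate_a_hz - rate_b_hz
    if diff > deadband_hz:
        return "right"
    if diff < -deadband_hz:
        return "left"
    return "straight"


# Made-up spike times (seconds) recorded over a 2 second window.
spikes_a = [0.1, 0.4, 0.9, 1.3, 1.8]
spikes_b = [0.2, 1.1, 1.7]

direction = steer(firing_rate(spikes_a, 2.0), firing_rate(spikes_b, 2.0))
print(direction)
```

In a closed loop, the robot's new position would change what its sensors feed back to the culture, which in turn changes the firing rates used for the next steering decision.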

Diagrams and behaviours

above: Following the discussion, a hypothetical timescale of products with embodied cells (larger version)

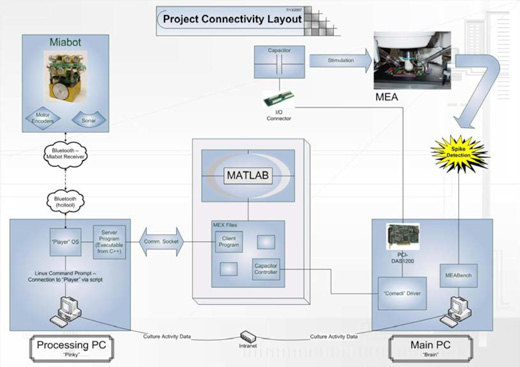

above: Dimitris' diagram of this 'closed loop' control system

above: Elio's diagram of the Neuroscope system architecture

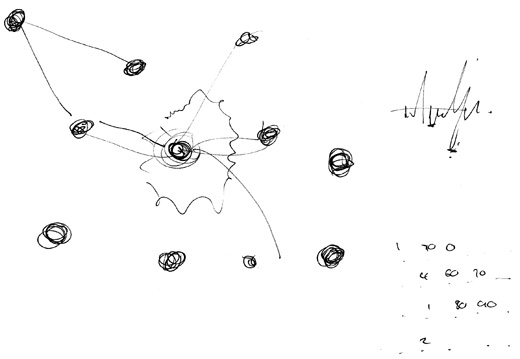

above: A sketch showing how individual cells communicate

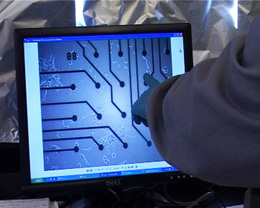

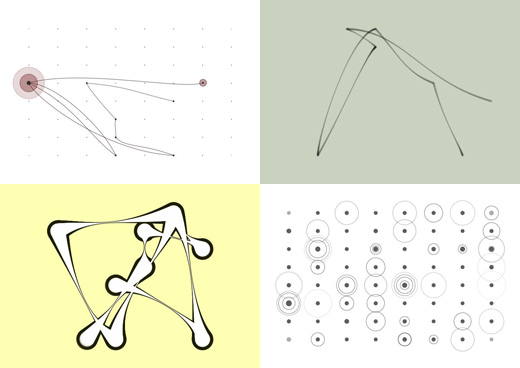

above: David used Processing to build the interaction viewable inside Neuroscope

Animated versions of the Neuroscope interface can be seen here and here. The final version is displayed on a personal media player inside Neuroscope, and a controller on the side of the device allows the cells to be selected.

Building the prototype

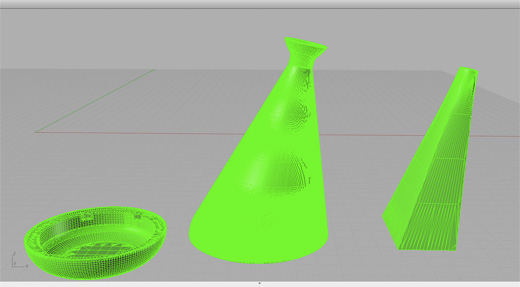

above: Modelling parts in Rhino

above: Assembling the prototype

above: The finished object